Progress & Versions

Every step from concept to delivery clearly visualized, with a complete timeline of versions, uploads, and feedback.

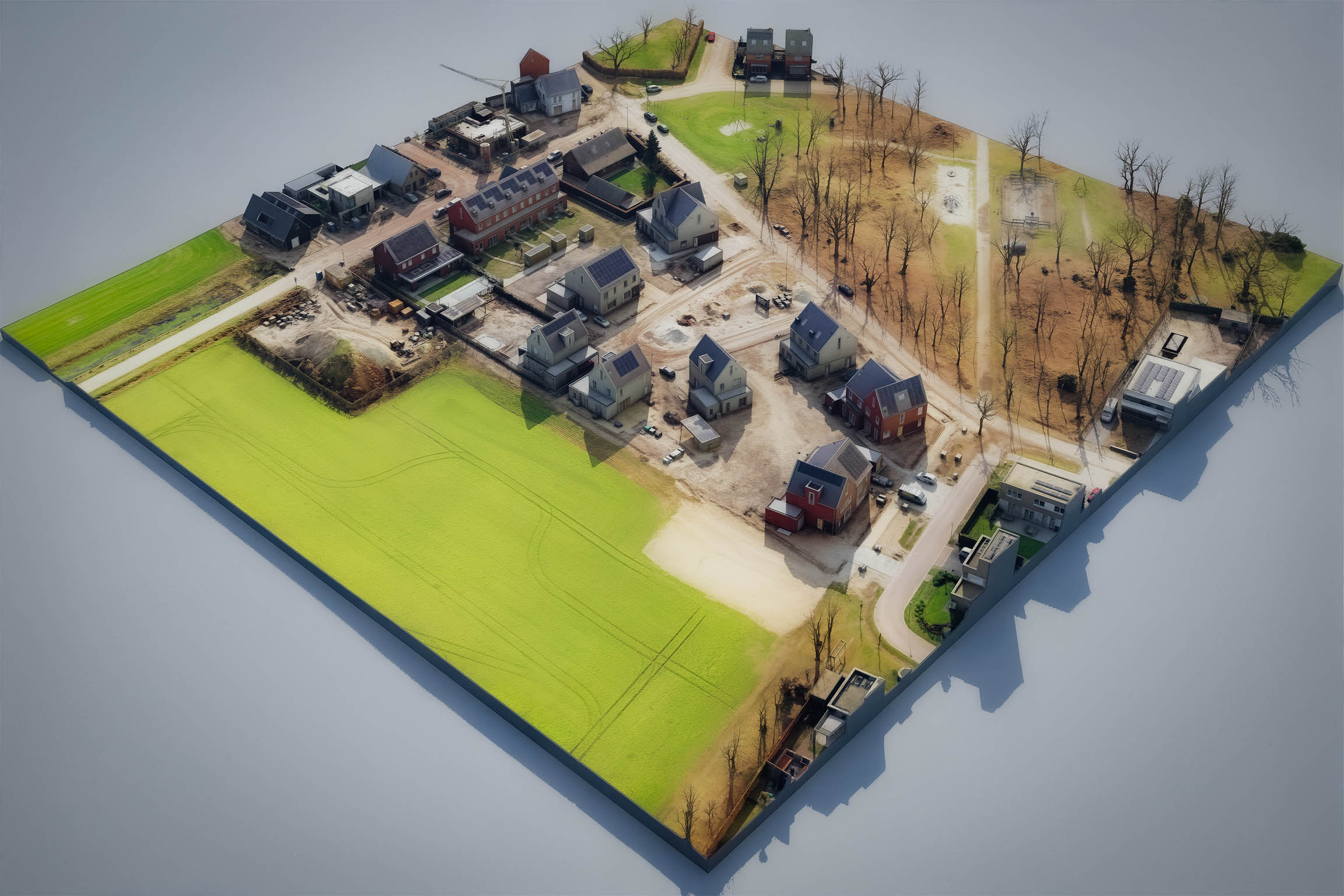

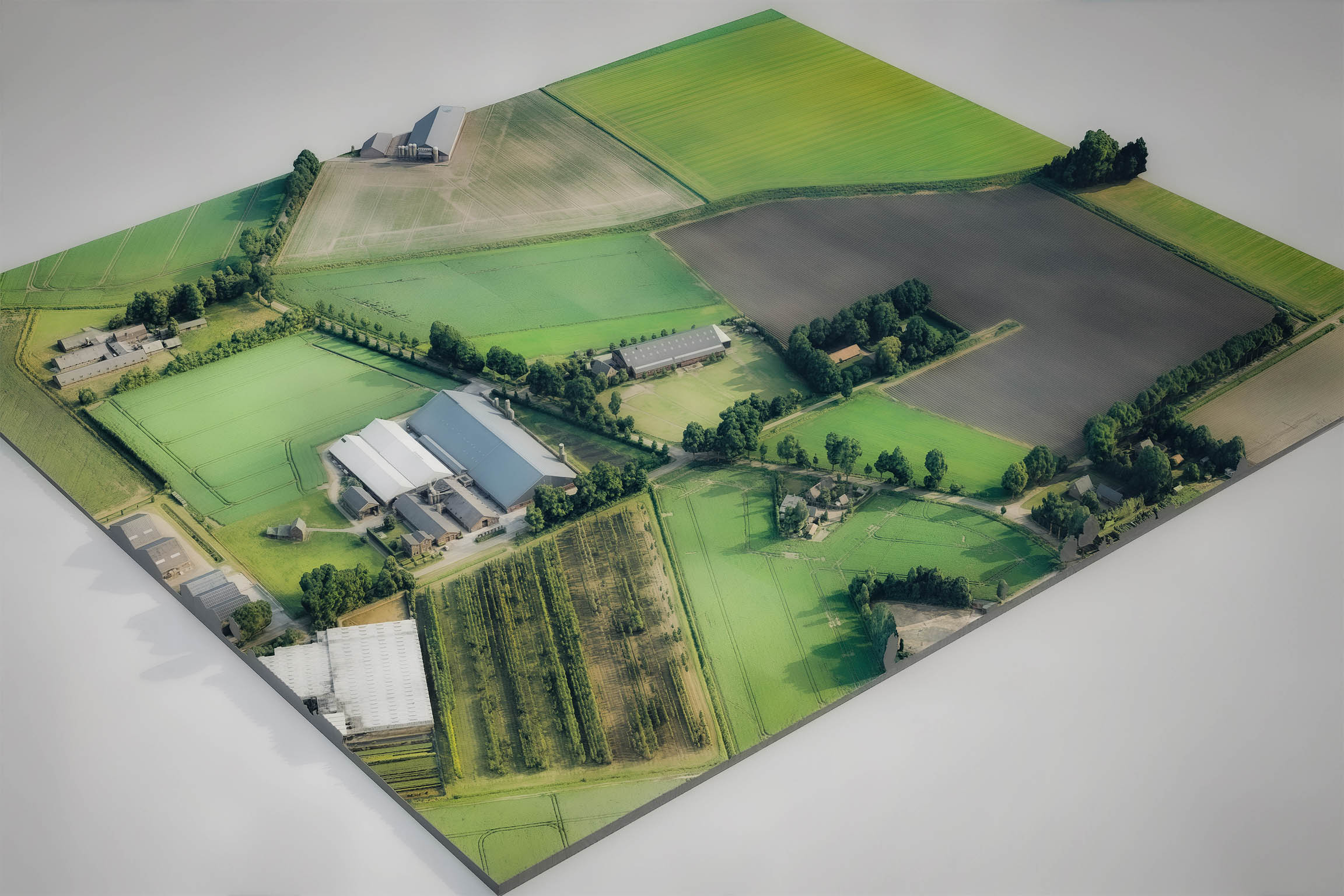

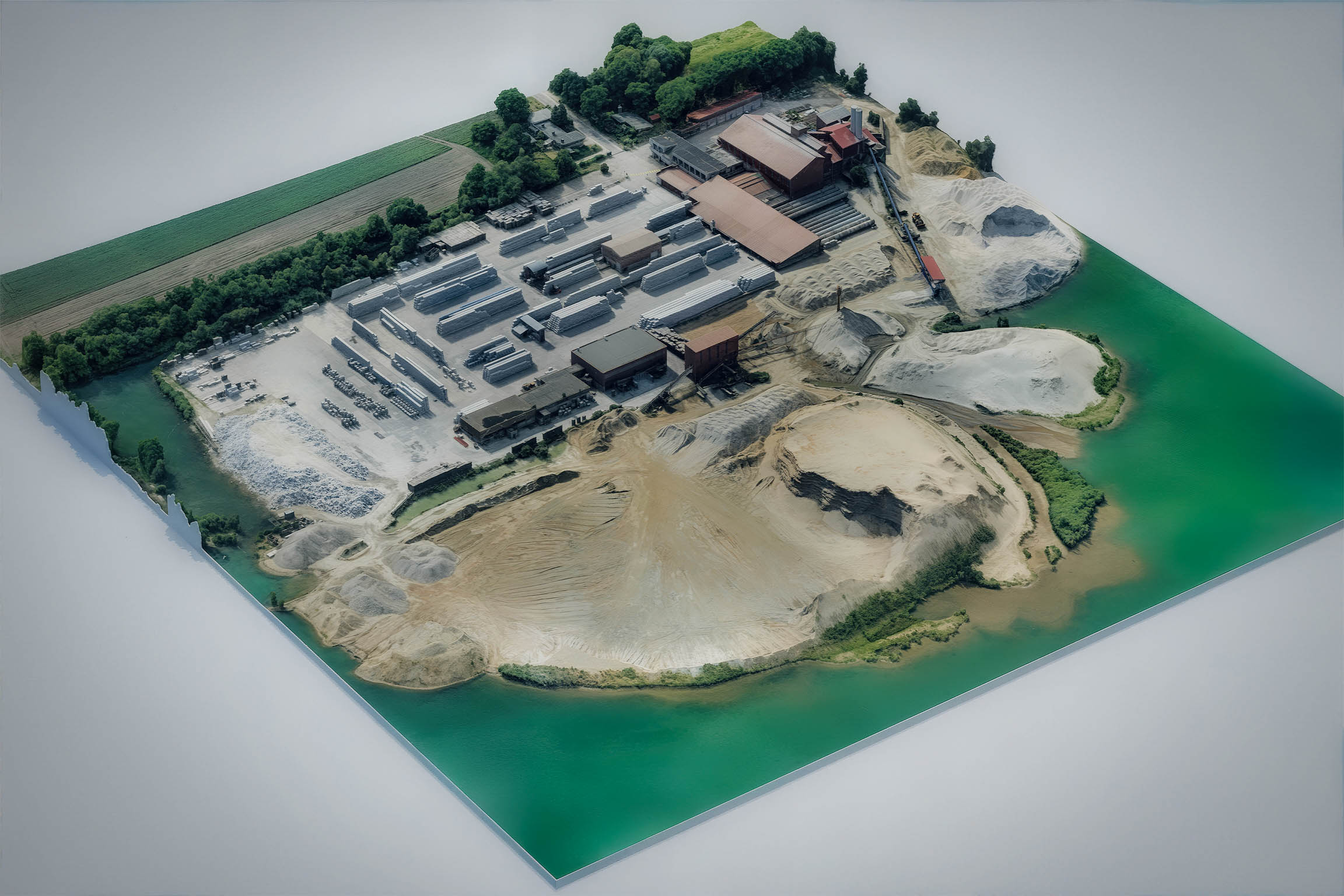

We leverage AI for stunning realism in 3D and showcase spatial plans within their existing environment.


At Avem3D, we believe in the power of contextual visualization.

By accurately capturing reality with 3D scans and combining this with AI-driven impressions and dynamic animations, we create clear insights and impactful presentations for your project.

View some project impressions here or dive into the detailed categories on our portfolio page.

We capture reality with advanced 3D scanning techniques. Explore the existing situation as a detailed, navigable 3D model.

Photorealistic still images, powered by AI. Transform sketches or simple models into stunning impressions.

Immersive fly-throughs of your project. Dynamic 3D animations offering a realistic virtual experience for presentations.


We believe in clear communication and complete control for our clients. Via the Avem3D Client Portal, you can easily navigate your projects, manage all high-resolution assets, and provide direct feedback on specific visualizations.


Progress is always visible, and you can easily download products or securely share them with your own stakeholders. Experience the convenience of one clear project environment.



Access a personal dashboard, and organize all delivered 3D models, videos, and project documents in one place.

Your project data is safe. Access via a personal account, TLS-encrypted connection, and secure cloud storage.

Generate secure links with one click, and safely and easily share access or assets with business partners or stakeholders.

At Avem3D, we closely follow the technological forefront and share our knowledge with you here. Discover how AI and new visualization techniques are changing the way we design, conceptualize, and experience.

In this section, we share sharp monthly analyses and hands-on examples of the latest AI, scanning, and visualization techniques for the built environment. No company updates, just practical insights that accelerate and enrich your design process.

Today, generative AI has placed a revolutionary new instrument into our hands. These tools can deliver fresh pictures in seconds, but without clear direction their output is a roulette wheel. The truly game-changing results don't come from hitting a "generate" button and hoping for the best, but from skillfully directing the technology to produce predictable, professional-grade, and context-aware visuals. This skill, a blend of architectural language and technical precision, is prompt engineering.

This guide is your comprehensive playbook. We will demystify the art and science of prompting, moving you from a passive user to an informed director of AI. First, we’ll explore the "why"—how these models interpret our words. Next, we'll survey the "what"—the 2025 toolkit of top image generators and their specific strengths in an architectural workflow. Finally, we will dive deep into the "how," deconstructing the anatomy of a master prompt and revealing the advanced techniques that will empower you to transform any design concept into a compelling, high-fidelity image.

Why does a long, detailed prompt consistently outperform a short, vague one? The answer isn't about feeding the AI more words for the sake of it. It's about understanding how these models "think" visually. To move from creating random pictures to directing precise architectural visualizations, we first need to look under the hood.

Imagine the AI starts with a canvas full of random static, like an old TV with no signal. Your prompt is the set of instructions it uses to turn that noise into a coherent image. Here’s how it works in simple terms:

First, the AI translates your words into a set of precise digital instructions (think of them as GPS coordinates for ideas). Then, the model begins to refine the static in a step-by-step process. At each step, it checks its work against your instructions, asking itself: "Does this emerging shape look like the 'apartment complex' I was asked for? Does this texture I'm forming match 'rough-cast concrete'?"

This entire process happens within what’s called a latent space (a vast, multi‑dimensional map of every visual style, object, and concept the AI has ever learned). Your prompt's coordinates guide the AI to a specific destination on this map.

• A vague prompt like "a modern house" leaves the AI wandering in a huge, generic region of the map, resulting in an averaged, often uninspired, image.

• A long, specific prompt provides a precise location, guiding the model to a much more focused and intentional result.

By understanding this fundamental relationship, you shift from making a hopeful request to giving a clear, technical briefing. You are directing the creative process, ensuring the final image is not a product of chance, but a direct reflection of your design intent.
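To make the "map" metaphor concrete, here is a deliberately simplified toy in Python. It assumes a tiny, hypothetical catalog of tagged images standing in for the model's learned knowledge and treats a prompt as a set of keywords that must all match. Real diffusion models do not filter by keywords; they turn text into continuous embeddings and blend everything nearby, which is why a vague prompt yields an averaged image rather than a list of candidates. The toy only shows how each extra, specific term shrinks the region the model can land in.

```python
# Toy analogy only: a hypothetical catalog of tagged images stands in for
# the model's latent space. Real models use continuous text embeddings.
CATALOG: dict[str, set[str]] = {
    "render_01": {"modern", "house", "flat roof", "white render"},
    "render_02": {"modern", "house", "pitched roof", "brick"},
    "render_03": {"modern", "house", "flat roof", "cantilever",
                  "rough-cast concrete", "golden hour"},
    "render_04": {"modern", "house", "glass curtain wall", "night"},
}


def region(prompt_terms: set[str]) -> list[str]:
    """Return the catalog entries compatible with every term in the prompt."""
    return sorted(name for name, tags in CATALOG.items() if prompt_terms <= tags)


vague = region({"modern", "house"})
specific = region({"modern", "house", "flat roof", "cantilever",
                   "rough-cast concrete", "golden hour"})

print(len(vague), "matches for the vague prompt")  # 4 matches for the vague prompt
print(specific)                                     # ['render_03']
```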

With a foundational understanding of how prompts work, the next step is choosing the right tool. The AI image generation landscape is evolving at a breakneck pace, but as of July 2025, four key players stand out for their power, flexibility, and relevance to architectural workflows.

1. Midjourney

Renowned for its exceptional artistic and cinematic quality, Midjourney is the go-to for early-stage conceptual design and mood boards. It excels at generating evocative and inspiring visuals with a distinct, illustrative style. Use it to explore formal or expressionist visual styles or to create high-end conceptual imagery that sparks client conversations, but expect less precise control over fine-grained details compared to other tools.

2. ChatGPT-4o (Integrated Image Generation)

The primary strength of OpenAI's latest model is its natural, conversational interface. This makes it ideal for rapid brainstorming, allowing you to refine images with simple follow-up requests like, "now make the cladding from weathered steel." It's best for generating a wide range of reference images or when speed is a higher priority than fine-tuned artistic control, as it lacks parameters for ensuring perfect consistency across a series.

3. Stable Diffusion

As an open-source model, Stable Diffusion is the power-user's choice for deep workflow integration and precision control. Its ecosystem of tools like ControlNet allows for high-precision renderings based on input sketches or CAD linework. Because it can be run locally, it's the most secure option for confidential projects. This flexibility comes with a steeper learning curve, but it is the unparalleled solution when custom visual styles and ultimate control are non-negotiable.

4. Flux AI

A powerful newcomer, Flux AI's architecture is built for high-fidelity output where accuracy and continuity are paramount. Benchmarks show its prompt-to-pixel accuracy rivals or exceeds its competitors. Its "Kontext" model is particularly relevant, allowing you to use reference images to maintain façade or character consistency while making precise local changes. With variants for cloud use or local hosting, it's an excellent choice for mid-to-late-stage visualizations, such as façade studies or stakeholder iterations where material changes must be tracked without reworking the entire scene.

Crafting an effective prompt is not about finding a single, elusive formula. Instead, it’s about using a flexible framework, assembling key components to transform a vague idea into a clear, actionable instruction for the AI. Understanding these core building blocks is fundamental to mastering AI-assisted design visualization. Each element adds a layer of specificity and control, allowing you to guide the AI with the precision of a design brief. Below are the five essential components of a master prompt for architectural visualization.

1. Subject

This is the anchor of your prompt: the "what." It defines the primary focus of the image. A clear subject is the single most important element for a relevant result. Always start here.

• Simple: "A building"

• Specific: "A modern, single-family house with a flat roof and large cantilevered sections, featuring a central atrium and green terraces."

2. Style

This component defines the visual language and emotional impact of the image. It's where you specify the design's heritage, the materials, and the desired rendering technique.

• Architectural Styles: Use keywords to guide the AI towards specific design movements.

Examples: Brutalist, Deconstructivist, Bauhaus, Japanese Minimalist, High-Tech, Art Deco.

• Rendering & Artistic Styles: Specify the desired final look and level of abstraction.

Examples: photorealistic, cinematic, schematic drawing, watercolor sketch, concept art, matte painting.

• Materials: Mentioning key materials is crucial for grounding the image in reality and conveying texture.

Examples: weathered steel, polished concrete, reclaimed timber, glass curtain wall, terracotta tiles.

3. Composition

This is where you direct the AI's "camera," dictating how the subject is presented to influence the viewer's perspective and focus.

• Camera Angles & Perspective: Control the vantage point.

Examples: eye-level view, bird's-eye view (aerial shot), drone shot, low-angle shot, isometric projection.

• Camera Shots: Define the proximity to the subject.

Examples: wide shot, medium shot, close-up, detail shot.

• Aspect Ratio: Aspect ratio is often set with a separate parameter (in Midjourney, for example, --ar 16:9), but mentioning the desired format in natural language can also influence the composition.

Examples: "A wide 16:9 cinematic shot," or "A vertical 3:4 detail shot of the facade."

4. Lighting

Lighting is pivotal for setting the mood, defining form, and enhancing realism. This component allows you to specify the time of day, weather, and quality of light.

• Time of Day & Natural Light: This has a dramatic effect on the mood and color palette.

Examples: golden hour, sunrise, midday, twilight, blue hour, night.

• Weather & Atmospheric Conditions: Add another layer of realism and emotion.

Examples: overcast sky, bright sunny day, foggy morning, dramatic storm clouds.

• Light Quality: Describe the nature of the light itself.

Examples: soft diffused light, strong directional light creating deep shadows, soft ambient interior lighting.

5. Technical Parameters

These are specific keywords and commands that influence the AI's rendering engine, pushing the output quality and detail to a professional standard.

• Output Quality & Detail: Signal your desire for a high-resolution, polished look.

Examples: 8K, 4K, ultra-high definition, intricate details, sharp focus.

• Render Engine Emulation: Prompt the AI to mimic the specific look and feel of popular rendering engines. This is a powerful trick for achieving photorealism.

Examples: Photorealistic, Architectural render, V-Ray, Cinematic lighting.
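The five components can be treated as fields of a brief. The Python sketch below is one hypothetical way to assemble them into a single comma-separated prompt, subject first, in the order this guide introduces them. The class and field names are our own illustration, not part of any tool's API; tool-specific parameters (such as Midjourney's --ar for aspect ratio) would still be appended separately.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """Hypothetical container for the five components of a master prompt."""
    subject: str
    style: list[str] = field(default_factory=list)
    composition: list[str] = field(default_factory=list)
    lighting: list[str] = field(default_factory=list)
    technical: list[str] = field(default_factory=list)

    def build(self) -> str:
        # The subject anchors the prompt, so it always comes first.
        parts = [self.subject.strip().rstrip(".")]
        # Remaining components follow in order; blank entries are skipped.
        for group in (self.style, self.composition, self.lighting, self.technical):
            parts.extend(term.strip() for term in group if term.strip())
        return ", ".join(parts)


brief = PromptBrief(
    subject="A modern, single-family house with a flat roof and large cantilevered sections",
    style=["Japanese Minimalist", "photorealistic", "reclaimed timber", "polished concrete"],
    composition=["eye-level view", "wide shot"],
    lighting=["golden hour", "soft diffused light"],
    technical=["ultra-high definition", "sharp focus"],
)
prompt = brief.build()
print(prompt)
```

Keeping the components as separate fields makes it easy to vary one layer at a time, for example swapping "golden hour" for "blue hour" while the subject and composition stay fixed.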

Moving from a simple "generate" button to a structured, multi-faceted prompt is the key to unlocking the true potential of AI image generation. As we've seen, crafting a successful prompt is not about guesswork. It is a deliberate design process. By understanding the 'why' of latent space and choosing the right tool for the job, you can begin to direct the AI with intention.

The five core components (Subject, Style, Composition, Lighting, and Technical Parameters) are the building blocks of your new design brief. Think of them not as a rigid formula, but as a flexible framework for communicating your vision to a powerful new collaborator. Each keyword you choose, from "wooden facade" to "golden hour," helps steer the output from a random guess to a predictable and professional-grade visual.

This technology does not diminish the role of the architect, but elevates it. The creative vision, the understanding of space, and the specific design intent remain uniquely human. AI is the instrument, but you are the director. By mastering the art of the prompt, you can now translate your ideas into compelling, high-fidelity imagery with more speed, clarity, and creative freedom than ever before, ensuring your best work is not just imagined, but vividly and accurately seen.


Scroll any social feed today and you'll find breathtaking AI videos: hyper-realistic drone shots of untouched landscapes, cinematic journeys through historical eras, and fantastical creatures moving through dream-like worlds. They stop you mid-scroll precisely because they move.

But for architects and landscape designers, the brief is far more demanding. Your building cannot melt when the camera pans. Geometry must remain rigid, materials must stay truthful, and the space must read in three dimensions, not as a psychedelic morph. Most generative video models still struggle with this fundamental spatial discipline, producing jarring inaccuracies: the 'morphing façade,' the sudden appearance of nonsensical structural elements, or flickering details that instantly shatter the illusion of a real, buildable project.

This guide zeroes in on what actually works today, where the professional red lines are drawn, and provides an actionable workflow you can use on your next concept presentation.

The most potent and practical use for AI video in an architectural workflow today is to start with a strong still image, whether an AI-generated concept or a final beauty render, and breathe subtle life into it. This simple act of adding drifting clouds, a slow camera push-in, or animated human silhouettes is invaluable for conveying instant atmosphere, making it perfect for powerful social media teasers and compelling concept decks. Beyond presentation, this technique becomes a design tool in its own right, enabling rapid mood-testing by visualizing the same massing under a sunrise versus a rainy dusk in minutes, not hours. Ultimately, this all serves one crucial goal: generating client excitement by selling an emotional vision far more effectively than a static slide ever could.

However, understanding the tool's limitations is just as important as recognizing its strengths. It is crucial to know when not to use it: if you have an existing, fully-modelled scene in Unreal Engine, Twinmotion, or V-Ray, traditional rendering remains the non-negotiable standard for accuracy and frame-rate. In this context, think of AI video as a powerful concept amplifier, not a production renderer.

Here is a snapshot of the current landscape, limited to what matters for architects: coherence, control, and output quality.

⦁ Premium Leaders (Highest Fidelity & Spatial Consistency)

Runway Gen-4: A mature web/iOS tool with advanced camera sliders and a "director mode" for ensuring shot-by-shot consistency.

Midjourney V7 (Video): Noted for exceptional style fidelity that perfectly matches its renowned still-image engine, making it ideal for creating "living concept art."

Kling AI 2.1: Impressive 3D reasoning and a "motion-brush" for object-level control. It produces some of the most stable façade lines and believable camera moves on the market.

A Note on Google Veo3: While publicly accessible and technically powerful, it currently lacks a direct image-to-video workflow. This makes transforming your hero render into a controlled shot impractical for architects today.

⦁ The Power-User's Path (Granular Control, Steep Curve)

Stable Diffusion + AnimateDiff / ComfyUI: For the expert with a local GPU. This route allows you to wire in depth maps, ControlNets, and precise CAD silhouettes for absolute frame-level authority. Expect to tinker with node graphs and climb a steep learning curve, but the pay-off is unmatched control over the final output.

⦁ Mid-Tier & Experimentation Tools (Fast Iterations, Lighter Polish)

Pika Labs, Haiper Pro, Luma Dream Machine: These are excellent, accessible platforms for rapid exploration. Luma's Dream Machine is particularly adept at inferring believable dolly moves from a single still, though it offers fewer explicit controls than the premium leaders.

At Avem3D, we find the best results come from a hybrid approach that leverages the unique strengths of different systems. For projects demanding the highest degree of precision, we turn to the Stable Diffusion + ComfyUI path, which allows us to align video output with exact architectural data. For assignments where speed and stylistic coherence are paramount, we rely on the high-fidelity output from premium leaders like Kling AI and Runway. While the new video model in Midjourney V7 is only days old and shows immense promise, we have not yet integrated it into our production workflow at the time of writing.

1. Start with a Flawless Seed – Your Still Image is Everything.

Export or generate your starting image at the final aspect ratio you intend to use. Ensure it has a clean horizon line and fully resolved entourage elements, because the AI can only animate what it can see. Any movement beyond the original frame will force the AI to hallucinate new and often nonsensical architectural elements, instantly destroying the design's integrity.

2. Direct Modest, Deliberate Motion.

A common mistake is writing a vague prompt. A successful video prompt consists of two parts. First, accurately describe the key elements in your still image to anchor the AI's understanding. For example: "A photorealistic, high-resolution image of a modern, timber-clad cabin with large glass windows, nestled in a misty pine forest at dusk." Only after this detailed description do you add a single, clear camera command. Reliable commands include a slow push-in, a gentle upward tilt, a slow sideways movement, or a clockwise orbit. Stick to one simple motion per generation for the best results.
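The two-part structure is easy to enforce in a small helper. This Python sketch assumes a hypothetical whitelist built from the camera moves listed above and accepts exactly one of them per call, so a prompt can never ask for two motions at once. The output wording is illustrative; adapt it to whichever video tool you use.

```python
# Hypothetical whitelist: the single, modest camera moves recommended above.
CAMERA_MOVES = {
    "push-in": "a slow push-in",
    "tilt-up": "a gentle upward tilt",
    "truck": "a slow sideways movement",
    "orbit": "a clockwise orbit",
}


def video_prompt(scene_description: str, move: str) -> str:
    """Anchor the still image with a description, then add ONE camera command."""
    if move not in CAMERA_MOVES:
        raise ValueError(f"unknown move {move!r}; choose one of {sorted(CAMERA_MOVES)}")
    scene = scene_description.strip().rstrip(".")
    if not scene:
        raise ValueError("describe the still image first")
    return f"{scene}. Camera: {CAMERA_MOVES[move]}."


print(video_prompt(
    "A photorealistic, high-resolution image of a modern, timber-clad cabin "
    "with large glass windows, nestled in a misty pine forest at dusk.",
    "push-in",
))
```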

3. Iterate, Select, and Upscale.

Run multiple generations from the same prompt and seed image; select the one with the least warping or flickering. Pass the chosen clip through a dedicated tool such as Topaz Video AI for sharpening, denoising, and upscaling to a higher resolution.
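Picking the take with the least flickering can be partly automated. The Python sketch below scores each take by its mean absolute second-order temporal difference: smooth camera motion changes pixel values roughly linearly from frame to frame, so its second difference stays small, while flicker and sudden jumps produce spikes. It assumes frames have already been decoded, converted to grayscale, and downsampled into flat lists of floats (that step is not shown). It is a heuristic for shortlisting, not a replacement for watching the clips: a slow, smooth warp of the façade would still score well.

```python
def flicker_score(frames: list[list[float]]) -> float:
    """Mean |f[t-1] - 2*f[t] + f[t+1]| over all pixels; lower means steadier."""
    if len(frames) < 3:
        raise ValueError("need at least three frames")
    total, count = 0.0, 0
    # Slide a three-frame window over the clip and compare pixel by pixel.
    for prev, cur, nxt in zip(frames, frames[1:], frames[2:]):
        for a, b, c in zip(prev, cur, nxt):
            total += abs(a - 2 * b + c)
            count += 1
    return total / count


def pick_steadiest(takes: dict[str, list[list[float]]]) -> str:
    """Return the name of the take with the lowest flicker score."""
    return min(takes, key=lambda name: flicker_score(takes[name]))


# Hypothetical two-pixel "clips": one brightens steadily, one flickers.
smooth = [[0.1 * t, 0.2 * t] for t in range(6)]
flickery = [[0.5 if t % 2 else 0.0, 0.3] for t in range(6)]
print(pick_steadiest({"take_a": flickery, "take_b": smooth}))  # take_b
```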

4. Apply the Professional Polish in Post-Production.

Finally, import the upscaled clip into a professional editor such as DaVinci Resolve, Premiere Pro, or Final Cut Pro. This is where you perform a final color grade and use advanced features to perfect the timing and length of each clip. For example, you can slow down footage without creating jitter by using DaVinci Resolve's frame interpolation, which uses AI to generate the missing in-between frames. If a clip feels slightly too short, Premiere Pro's Generative Extend feature can use AI to add a few extra seconds. These techniques give you maximum control before you trim each clip into a 5- to 10-second shot and assemble the shots into a final sequence.
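The arithmetic behind retiming shows why interpolation matters. Slowing a clip while keeping the frame rate constant multiplies the number of frames needed; without interpolation, the editor can only repeat existing frames, which reads as stutter. This Python sketch (our own helper, not a feature of any editor) computes the frame budget.

```python
def retime_plan(duration_s: float, fps: float, speed: float) -> dict[str, float]:
    """Frame budget for slowing a clip to `speed` (0.5 = half speed) at constant fps."""
    if not 0 < speed <= 1:
        raise ValueError("speed must be in (0, 1] for a slow-down")
    source_frames = round(duration_s * fps)
    output_duration_s = duration_s / speed
    output_frames = round(output_duration_s * fps)
    return {
        "source_frames": source_frames,
        "output_duration_s": output_duration_s,
        "output_frames": output_frames,
        # Frames the interpolator must invent rather than copy.
        "frames_to_synthesize": output_frames - source_frames,
    }


# A 5-second, 24 fps AI clip slowed to half speed becomes a 10-second shot.
plan = retime_plan(5.0, 24, 0.5)
print(plan)
```

At half speed, half of the delivered frames are synthesized, so they deserve the same scrutiny for warping as the original generation.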

This meticulous workflow is a direct response to the technology's core limitations. From façades that defy physics (spatial incoherence) to 'hallucinated' details that alter a design, these issues are inherent to current models. They underscore why a controlled, image-first approach with simple camera moves is not just a best practice—it's a necessity for achieving a usable, professional result.

These technical realities lead to a crucial professional responsibility: transparency. It is vital to frame these AI-generated videos correctly when presenting to clients. Explain that they are conceptual tools designed to evoke mood and atmosphere, not to serve as a precise representation of the final, buildable design. Being upfront that the video is created with AI and may contain minor artistic interpretations manages expectations and reinforces its role as a source of inspiration.

AI video has now reached the point where it can turn a static concept into a memorable micro-experience, perfect for early design mood boards, social media reveals, and client "wow" moments. Yet it is equally clear that the same technology is not ready to replace traditional, physically accurate walkthroughs from dedicated 3D software.

The gap between a raw AI output and a professional-grade video is bridged by expertise. If wrangling seeds, upscalers, and post-production isn’t on your agenda, Avem3D can handle that heavy lifting. We combine deep architectural understanding with bespoke AI prompting and rock-solid editing to deliver clips that inspire without warping. Let’s bring your vision to life.

This article deviates slightly from our usual direct focus on spatial development technology to explore a foundational issue impacting all industries, including our own: the gap between AI's rapid development and its slower real-world adoption.

AI is everywhere, constantly making headlines with its astonishing advancements. Yet, if you look closely, its widespread implementation often lags behind its breathtaking potential. Why aren't more firms fully automating core processes? Why do so many powerful AI tools, promising efficiency and innovation, gather dust on the shelf?

The answer might not lie in the technology itself, but in something far more fundamental: human psychology. While AI models race ahead in capability, deeply ingrained human biases regarding trust, risk, and accountability are creating a bottleneck. This article will explore these often-subconscious roadblocks, illustrating them with real-world examples and research, and revealing why this very friction presents a significant opportunity for those who understand and navigate it.

Consider this: In McKinsey’s 2025 “State of AI” survey, a majority of firms now run AI in three or more functions, yet still only a minority of business processes are automated at all [1]. Furthermore, fewer than one-in-three citizens in many tech-mature countries—including the Netherlands—say they actually trust AI on first encounter, even though they regularly benefit from it behind the scenes. Worldwide, 61% of people admit they are more wary than enthusiastic about AI [14]. These statistics underscore a profound gap between technological readiness and human willingness to adopt it.

Our interaction with AI isn't purely rational; it's heavily influenced by deeply rooted psychological traits. Understanding these subconscious roadblocks is the first step towards bridging the adoption gap.

The Allure of the Familiar: Status Quo Bias & the "Difficult Path" Preference

We, as humans, often prefer the hard road we've walked before, even if a potentially easier, more efficient path exists. This is the "status quo bias"—our instinctive preference for familiar processes, even when they're suboptimal, over uncertain new ones. Change feels like a potential loss, triggering hesitation.

In the architectural, engineering, and construction (AEC) sector, this manifests as a significant resistance to adopting innovative digital tools like Building Information Modeling (BIM), advanced construction management software, or sustainable building techniques. BIM, for instance, delivers fewer clashes, tighter budgets, and cleaner as-builts, yet adoption across AEC markets still crawls [3]. Many teams cling to 2-D drawings because the learning curve feels riskier than the cost of errors they already know [3].

The Need for a Human Face: Trust, Anthropomorphism & Intermediaries

We are wired to trust other humans—faces, voices, and authority figures—far more readily than abstract systems, data, or algorithms. This deeply ingrained preference often dictates our comfort with AI.

Think about a common advertisement: a doctor, even an actor, explaining why a certain toothpaste is better for you. We often find this more convincing than being shown the scientific study itself; it’s the "white-coat effect." The same dynamic dogs AI: controlled experiments show that adding a friendly avatar, voice, or human intermediary triggers a double-digit lift in perceived competence and warmth [4]. While anthropomorphic cues can boost trust, there’s a delicate balance; too human-like can trigger the "Uncanny Valley effect," leading to discomfort if imperfectly executed.

This is why human intermediaries become crucial. While AI excels at automating routine tasks, humans are still preferred for complex, high-value interactions requiring empathy. For example, in real-estate finance, 70–80% of trades on major exchanges are now algorithmic, yet investors keep paying management fees to a human advisor who, in turn, asks the bot for decisions.

The Accountability Imperative: The Blame Game

When an autonomous shuttle grazes a lamp-post, global headlines erupt; when a human driver totals a car, that’s just traffic. We have a fundamental psychological need to assign blame when things go wrong. This becomes profoundly problematic with AI, where there isn't always a clear "person" to point fingers at, creating a "responsibility vacuum."

This is known as the moral crumple zone: in a mixed system, the human operator becomes the convenient scapegoat even if the machine did most of the driving [7]. Directors reason that “nobody gets fired for not using AI,” whereas a single AI-related mishap could end a career [48]. Research shows that if an autonomous system offers a manual override, observers tend to place more blame on the human operator for errors, even if the AI is statistically safer [10]. When AI fails in a service setting, blame often shifts to the company that deployed it [15].

This inherent need for accountability poses a significant challenge for AI adoption. Until legal liability frameworks mature (as seen with the EU AI Act and UK autonomous vehicle insurer models [24, 25]), boards will often default to human-centred processes they can litigate. This creates an opportunity: build services that absorb this anxiety, offering insured, audited AI workflows so clients can point to a responsible intermediary when regulators come knocking.

The Shadow of Loss: Loss Aversion & Unfamiliar Risks

One visible AI error erases a thousand quiet successes. One of the most potent psychological principles hindering AI adoption is loss aversion: the idea that people strongly prefer avoiding losses to acquiring equivalent gains. The pain of a potential loss from AI—whether it's perceived job displacement, a disruption to familiar workflows, or an unfamiliar technical failure—often feels more salient than the promised benefits.

Humans tend to overestimate the likelihood and impact of rare but catastrophic events, a cognitive bias known as "dread risk" [53]. Even if statistics show AI systems outperform humans on average, the possibility of an unknown type of failure can deter adoption [54]. Hospitals, for instance, may hesitate to deploy diagnostic AIs that outperform junior radiologists because the image of an AI-caused fatal miss looms larger than the everyday reality of human oversight failures. This loss aversion is reinforced by managers' fears of being held accountable for AI failures, making the familiar, even if riskier, human process feel safer [48].

These psychological hurdles are not insurmountable. In fact, they create a significant, often overlooked, economic and professional opportunity for those who understand and are prepared to bridge this human-AI gap.

The Rise of the "AI Navigator" & the "Middle-Man Economy"

The very friction caused by human hesitation is spawning a new category of professionals: the "AI middle-man." These are not roles destined for replacement but individuals and firms who capitalize on the persistent need for human oversight, interpretation, and strategic guidance in AI implementation. They become the trusted "face" that guides others in using AI or delivers enhanced services that clients trust because they trust the human provider.

This "Human-in-the-Loop" (HITL) market is experiencing explosive growth. Analysts peg the prompt-engineering market at US $505 billion next year, racing toward US $6.5 trillion by 2034, reflecting a 32.9% CAGR [Perplexity Report, 2]. This exponential growth confirms that human expertise in judgment, ethics, and adaptation remains crucial for successful AI adoption, contradicting early predictions of widespread displacement. Roles like AI consultants, prompt engineers, and ethical AI oversight specialists are not temporary; they are foundational elements of the emerging "human-AI bridge economy."
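The quoted growth rate can be checked with the standard compound annual growth rate (CAGR) formula. Assuming the US $505 billion figure is the 2025 value, there are nine compounding years to 2034:

```latex
\mathrm{CAGR} = \left(\frac{V_{2034}}{V_{2025}}\right)^{1/9} - 1
             = \left(\frac{6.5 \times 10^{12}}{5.05 \times 10^{11}}\right)^{1/9} - 1
             \approx 12.87^{1/9} - 1 \approx 0.328
```

That is roughly 32.8%, consistent with the quoted 32.9% once the rounding of the endpoint values is taken into account. The check confirms only that the figures are internally consistent, not the forecast itself.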

Strategies for Building Trust and Accelerating Adoption

For professionals in any field, becoming an "AI Navigator" means adopting strategies that align with human psychology:

Our own psychology – the fears, biases, and heuristics we bring to new technology – is often the toughest hurdle in AI adoption. The evidence is clear: trust underpins every major barrier. When people trust an AI system, they are willing to use it; when they don't, progress stalls.

However, this is not a cause for despair but an invitation to lead. The very human biases that slow broad AI adoption simultaneously create a critical market niche. For professionals in spatial development, architecture, and design, this is a profound opportunity. You can bridge the human-AI gap, turning skepticism into confidence, and ultimately, unlocking AI’s immense potential not just for efficiency, but for truly impactful and ethical innovation. The future belongs to professionals who understand that the real frontier isn’t smarter machines—it’s calmer minds.

Sources:

Imagine presenting a stunning architectural design... floating in a digital void. Or perhaps placed against a generic, blurry backdrop that vaguely resembles the project site. While the design itself might be brilliant, the lack of authentic surroundings leaves stakeholders guessing. How does it truly relate to its neighbors? What impact will it have on the streetscape? Does it respect the existing environment? In today's visually demanding world, designing and presenting projects in isolation is no longer enough.

The solution lies in embracing accurate visual context capture. Modern reality capture technologies, particularly high-detail photogrammetry and drone scanning, allow us to create rich, visually faithful digital replicas of a project's site and its crucial surroundings. This isn't just about technical measurement; it's about building a foundational understanding – a visual digital twin – that transforms how we design, communicate, and ultimately, gain acceptance for our projects. This article explores why investing in capturing this visual reality is becoming indispensable for architects, urban developers, and landscape designers.

Visual context capture prioritizes faithfully representing the look and feel of a project's environment. Using techniques like drone or ground-based photogrammetry, we capture hundreds or thousands of overlapping images. Specialized software then processes these images to generate detailed 3D models (meshes or dense point clouds) that accurately reflect the real-world textures, colors, ambient light, complex shapes of vegetation, and intricate facade details of the site and its adjacent properties. While technical accuracy is inherent, the primary goal here is visual fidelity – creating a realistic digital stage upon which new designs can be confidently placed and evaluated.
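How many overlapping images a site needs follows from simple camera geometry. The Python sketch below assumes nadir (straight-down) drone photos over flat ground and uses the pinhole-camera relation: the ground width one photo covers equals sensor width times altitude divided by focal length. Dividing by the image width in pixels gives the ground sampling distance (GSD), and the spacing between neighbouring photos follows from the chosen overlap. The camera values are illustrative, roughly those of a drone with a 1-inch sensor, not a recommendation.

```python
def capture_plan(sensor_width_mm: float, focal_length_mm: float,
                 image_width_px: int, altitude_m: float, overlap: float):
    """Footprint, ground sampling distance and photo spacing for nadir images."""
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    # Similar triangles: footprint / altitude = sensor width / focal length.
    footprint_m = sensor_width_mm * altitude_m / focal_length_mm
    # Ground size of one pixel, in centimetres.
    gsd_cm = footprint_m / image_width_px * 100
    # Distance between photo centres along the image-width direction.
    spacing_m = footprint_m * (1 - overlap)
    return footprint_m, gsd_cm, spacing_m


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px wide images.
footprint, gsd, spacing = capture_plan(13.2, 8.8, 5472, altitude_m=60, overlap=0.8)
print(f"footprint {footprint:.1f} m, GSD {gsd:.2f} cm/px, spacing {spacing:.1f} m")
# footprint 90.0 m, GSD 1.64 cm/px, spacing 18.0 m
```

Raising the overlap from 80% to 90% halves the spacing and so roughly doubles the number of photos along each line; flying lower sharpens the GSD but shrinks each footprint.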

Integrating accurate visual context into your workflow offers profound advantages:

The modern visualization workflow often begins with reality capture. High-resolution photos are gathered using drones and ground cameras. This data is processed using photogrammetry software (like RealityCapture or Metashape) to generate a detailed 3D mesh or point cloud of the site and surroundings. This foundational context model is then imported into standard design and visualization software (Revit, Rhino, 3ds Max, Blender, Lumion, Twinmotion, Unreal Engine). The proposed architectural or landscape design model is accurately positioned within this context. From there, stunning visual outputs are created – static renders, immersive animations, interactive web viewers, or VR/AR experiences. The key is that the realism and accuracy of the final visual are directly built upon the quality of the initial context capture. This captured data can also serve as the high-fidelity input for advanced representation techniques like Gaussian Splatting, pushing visual boundaries even further.
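Positioning the design model within the captured context usually comes down to a coordinate transform between two systems. Each of the packages named above handles this through its own import and alignment tools; the Python sketch below only illustrates the underlying math. It assumes the design model uses a local metric grid, the scan has already been georeferenced, and the two differ only by a uniform scale, a counter-clockwise rotation about the vertical axis, and a translation. The function name and the example coordinates are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def place_in_context(points: Iterable[Point], origin: Point,
                     rotation_deg: float, scale: float = 1.0) -> List[Point]:
    """Map local design coordinates into the (assumed) scan coordinate frame.

    Applies a uniform scale, then a counter-clockwise rotation about the
    vertical Z axis, then a translation so the local origin lands on `origin`.
    """
    theta = math.radians(rotation_deg)
    c, s = math.cos(theta), math.sin(theta)
    ox, oy, oz = origin
    placed = []
    for x, y, z in points:
        sx, sy, sz = x * scale, y * scale, z * scale
        # 2D rotation in plan; heights are only scaled and shifted.
        placed.append((ox + c * sx - s * sy, oy + s * sx + c * sy, oz + sz))
    return placed


if __name__ == "__main__":
    # Hypothetical: one corner of a building footprint and a point on its roof,
    # placed at an example site origin with project north rotated by 90 degrees.
    corners = [(10.0, 0.0, 0.0), (0.0, 0.0, 3.0)]
    print(place_in_context(corners, origin=(155000.0, 463000.0, 2.5), rotation_deg=90.0))
```

Restricting the rotation to the vertical axis keeps the sketch simple and matches the common case of two levelled models; real scans may need a full 3D alignment, for example by matching surveyed control points.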

The Risks of Ignoring Visual Reality

In the complex world of contemporary design and development, accurately capturing and utilizing the visual context of a site is no longer a luxury; it is a fundamental necessity. It provides the essential grounding for designs that are aesthetically sensitive, contextually appropriate, and communicatively powerful. Investing in high-fidelity reality capture pays dividends in better design decisions, smoother approvals, and more persuasive presentations.

As technology continues to advance, this foundation is becoming even more powerful. Real-time rendering engines are now adept at handling massive, city-scale models, while AI is being explored to enhance the realism of captured data. Sharing these rich, contextual scenes via web platforms and immersive VR/AR headsets is making collaboration and stakeholder engagement more dynamic and accessible than ever. For professionals aiming to bring successful projects to life, the path forward is clear: anchor every vision in reality.

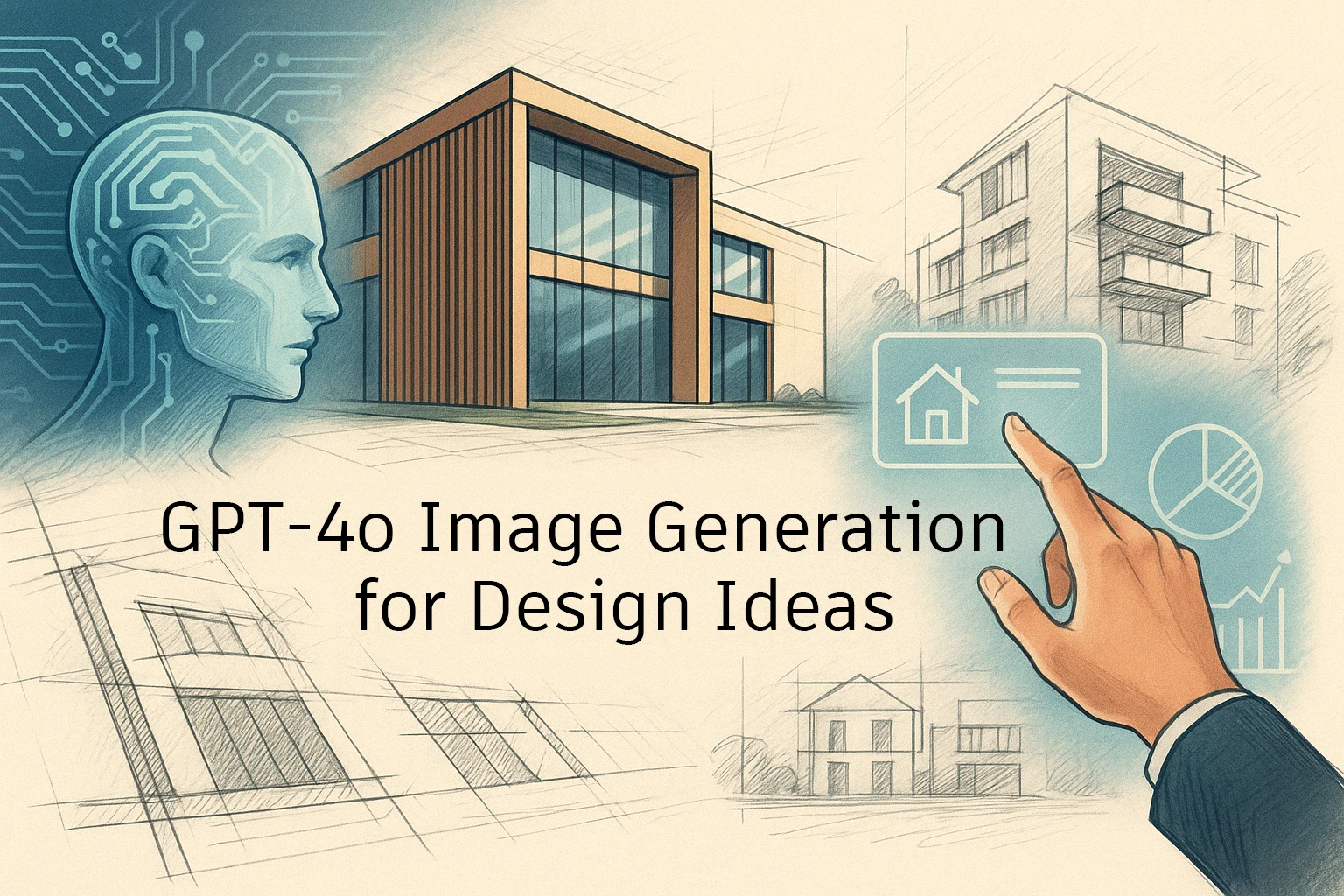

In the fast-paced worlds of architecture, urban development, and interior design, the pressure to visualize ideas quickly and compellingly is constant. Turning abstract concepts, client feedback, or initial sketches into tangible visuals often involves time-consuming modeling or rendering, especially in the early stages. While AI image generation tools have emerged rapidly, the latest advancements within ChatGPT itself, powered by the new GPT-4o model, signal a potentially significant shift – offering designers an integrated, conversational, and surprisingly capable visual assistant.

Recently announced, GPT-4o's native image generation is far more than a minor update. This isn't simply the previous DALL-E 3 model accessed through chat; it's a new, deeply integrated system designed to understand and create images with greater accuracy and nuance. For design professionals constantly juggling ideas and visuals, this integrated capability could substantially streamline concept exploration and visual communication.

So, what makes GPT-4o's image generation different? Instead of relying on a separate image model like DALL-E, OpenAI has built image understanding and creation directly into the core GPT-4o "omnimodel." Think of it less like two separate brains talking to each other (one for text, one for images) and more like one highly intelligent brain that can process and generate both seamlessly.

This integrated approach has key advantages. Because the same AI understands your text prompt and generates the image, it leverages GPT-4o's vast knowledge and sophisticated language comprehension. This leads to:

Beyond the technical improvements, how can architects, planners, and designers actually use this new capability in their day-to-day work? Here are some powerful applications emerging:

With various AI image tools available, where does GPT-4o fit in?

GPT-4o's unique strength lies in its deep integration within the ChatGPT environment. It combines powerful language understanding with advanced image generation, enabling a fluid, conversational workflow for visual creation and refinement that standalone tools can't easily replicate.

Know the Boundaries: Limitations for Professional Use

While incredibly powerful, it's crucial for design professionals to understand GPT-4o's current limitations:

The integration of potent image generation like GPT-4o's into widely accessible platforms is set to permanently reshape the design industry. It accelerates ideation by dramatically lowering the barrier to experimentation. It creates efficiency gains by speeding up routine visualization tasks. And it democratizes the field, giving smaller firms access to capabilities that once required specialist teams. To thrive in this new landscape, designers will need skills in prompt engineering, in critically evaluating AI output, and in working with AI as it becomes integrated into their design software.

GPT-4o's advanced capabilities mark a significant milestone. While not a replacement for rigorous design development or the critical judgment of a human professional, it excels as a powerful co-pilot—a catalyst for creativity and a tool for rapid communication. By embracing these evolving tools thoughtfully, understanding both their potential and their limitations, designers can enhance their workflows, explore more possibilities, and bring their visions to life more effectively than ever. For professionals committed to innovation, leveraging this technology is no longer optional; it is essential for staying relevant in the dynamic future of design.

Every design project begins with inspiration: the search for the core idea that will shape space and experience. This early phase, often characterized by sketching, brainstorming, and grappling with the blank page, is where creativity flourishes. Yet it can also be where designers feel most constrained by time or convention. Enter generative artificial intelligence (AI). While many associate AI in architecture with producing polished final renderings, its truly disruptive potential might lie much earlier: in the messy, exciting, and fundamentally human act of ideation.

This isn't about replacing the designer; it's about augmenting their imagination. We're moving beyond viewing AI as simply a tool for visualization and beginning to explore its role as a creative catalyst – a partner capable of sparking novel ideas, breaking through conventional thinking, and accelerating the exploration of uncharted design territories.

It’s crucial to distinguish between AI used for final presentation visuals and AI employed during the nascent stages of concept development. The latter operates less like a high-fidelity camera and more like an unpredictable, infinitely prolific sketchbook. As architect Andrew Kudless suggests, an AI-generated image in this context is akin to a rough sketch – valuable for "elucidating a feeling or possibility" but not a resolved design concept in itself.

Why does this distinction matter? Because it shifts the focus from AI as an output tool to AI as a process enhancer. Viewing AI as an exploratory partner allows architects to leverage its unique strengths – speed, combinatorial creativity, and access to vast visual datasets – to enrich their own thinking. It encourages experimentation and embraces the "artificial serendipity," as some call it, that can arise when human intuition guides AI's generative power, leading to ideas that might never surface through traditional methods alone.

The role of generative AI in the design process is evolving rapidly. Image generation is not merely a tool for producing a final picture but an active co-pilot in the crucial conceptual phase. This collaboration, a dialogue between human and machine, sparks ideas and fuels creativity in several ways:

Despite AI's growing power, the human designer remains firmly in control. The most effective use of generative AI involves a collaborative partnership where the architect acts as the crucial curator, interpreter, and director. This new role demands a blend of traditional design sense and new competencies, requiring a nuanced understanding of AI's limitations and potential.

Crucially, the architect provides interpretation and translation. An AI image isn't a blueprint. Its output can be visually stunning but technically unfeasible, lacking an inherent understanding of physics, structure, or construction logic. AI is also typically blind to site specifics, cultural nuances, or zoning laws. It is therefore the architect's rigorous expertise that must ground AI's often decontextualized ideas in reality, assess their feasibility, and translate the most valuable aspects into a tangible design language.

This curation process is vital. Designers must navigate persistent concerns about derivative outputs and the stylistic homogenization that can arise from training data biases. Furthermore, integrating evocative AI images into precise CAD or BIM workflows often requires significant manual translation, presenting new workflow hurdles. This entire process is underpinned by paramount ethical considerations, including issues of authorship, intellectual property, and the need for full transparency with clients regarding AI usage.

In this model, AI functions as a powerful amplifier, a "co-pilot," or "muse." But it is the architect who masters the art of the prompt, provides critical curation, and ultimately maintains the overall vision, acting as the indispensable ethical and creative compass.

Generative AI is undeniably reshaping the landscape of design tools and processes. Looking forward, we can anticipate tighter software integration, potentially enabling AI-suggested geometry or real-time visual feedback within standard CAD/BIM platforms. This will lead to a significant shift in early-phase workflows, with AI-augmented brainstorming sessions becoming standard practice. Consequently, evolving skillsets in AI literacy, prompt engineering, and critical curation will become increasingly vital for designers. The future likely involves a deeper hybrid intelligence, where AI handles rapid exploration and data analysis, freeing human designers to focus on strategic thinking, complex problem-solving, and imbuing projects with meaning and purpose.

The rise of AI image-generation tools offers more than just a new way to create images. It presents a profound opportunity to rethink the creative process itself in architecture and design. By embracing these tools not as replacements but as catalysts, as partners in exploration, designers can amplify their own imaginative capacity, break free from conventional constraints, and discover novel solutions.

The journey requires thoughtful experimentation, a critical eye, and a commitment to ethical practice. But for those willing to engage, generative AI promises to be a powerful co-pilot, helping navigate the complex, exciting terrain of early-stage design. Understanding and harnessing this potential is key to enriching the quality and diversity of the built environment we create and staying relevant and innovative in the future of design.